Key Points:

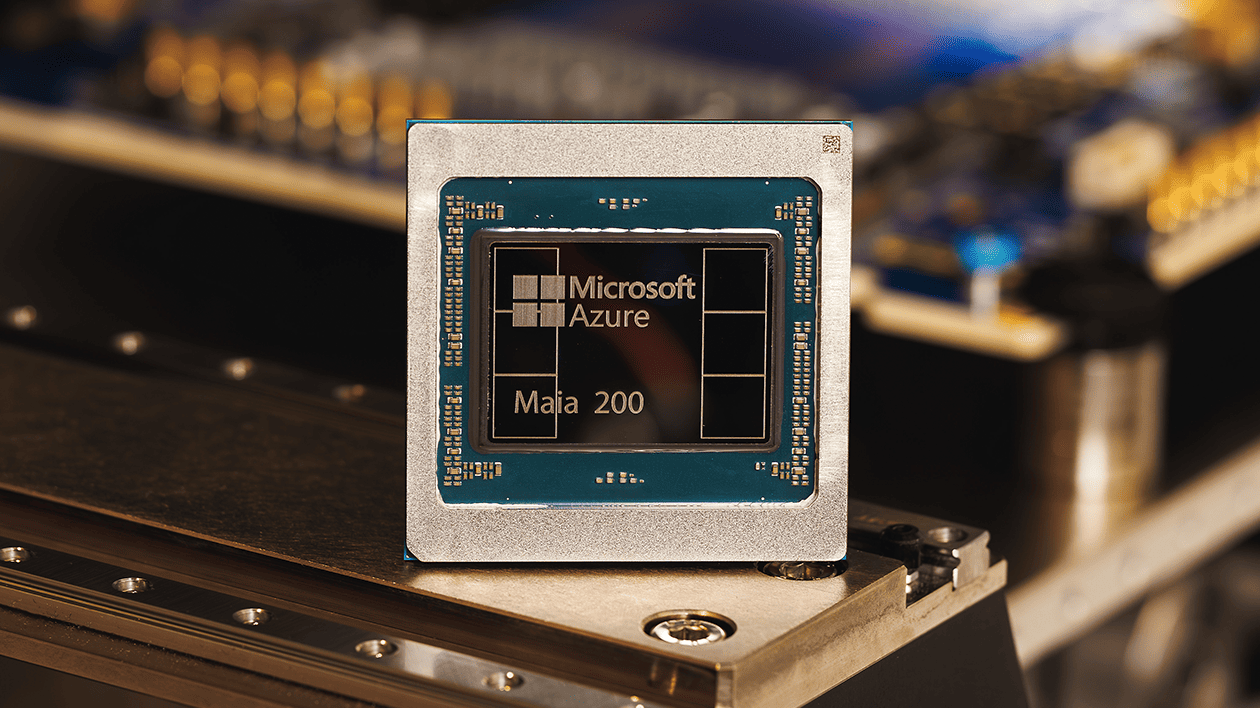

- Microsoft said its new AI chip, the Maia 200, offers 30% more performance than alternatives at the same price.

- The company has begun deploying the chips in its U.S. data centers.

- The chips will be used by Microsoft's internal AI team and will also power Microsoft 365 Copilot for commercial customers, with broader availability coming soon.

The Maia 200, which follows the Maia 100 released in 2023, has been engineered to run powerful AI models faster and more efficiently, the company said. The chip has more than 100 billion transistors and delivers more than 10 petaflops of 4-bit (FP4) performance and about 5 petaflops of 8-bit (FP8) performance, significantly more than its predecessor.

Inference is the computation involved in running a trained model, as opposed to the computation required to train it. As AI companies mature, inference becomes an increasingly large share of their operating costs, which has renewed interest in ways to optimize it.

Microsoft hopes the Maia 200 can be part of that optimization, helping AI businesses run with less disruption and lower power use. "In practical terms, one Maia 200 node can effortlessly run today's largest models, with plenty of headroom for even bigger models in the future," the company said.

The chips are made using Taiwan Semiconductor Manufacturing Co.'s 3-nanometer process. Each server connects four chips, which are linked over Ethernet rather than InfiniBand, the networking standard for which NVIDIA has sold switches since acquiring Mellanox in 2020.

Microsoft’s new chip is also part of a growing trend of tech giants choosing to build their own chips in an effort to reduce their reliance on NVIDIA, whose cutting-edge GPUs have become increasingly critical to the success of AI companies.

Google, for example, has its own TPUs (tensor processing units), which it does not sell as chips but offers as computing power through its cloud.

There's also Trainium, Amazon's AI accelerator chip. The e-commerce giant released its latest version, Trainium3, in December.

In both cases, these chips can offload some of the computing workload that would otherwise run on NVIDIA GPUs, reducing overall hardware costs.

With Maia, Microsoft is positioning itself to compete with these alternatives. In a press release on Monday, the company said Maia delivers three times the FP4 performance of Amazon's third-generation Trainium chips and higher FP8 performance than Google's seventh-generation TPUs.

Microsoft says Maia is already hard at work running AI models from the company's Superintelligence team, and it is also supporting the Copilot chatbot. On Monday, the company said it has invited a range of parties, including developers, academics, and AI labs, to use the Maia 200 software development kit for their workloads.
