Google is launching Gemini 3 today, a new series of models that the company calls its “most intelligent” and “factually accurate” AI systems. It’s also an opportunity for Google to leapfrog OpenAI after the failed launch of GPT-5 and potentially put the company at the forefront of consumer-focused AI models.

“This is the first time we are shipping Gemini in Search on day one,” says CEO Sundar Pichai.

Gemini 3 is rolling out via AI Mode, an interface in Google Search. One standout feature is how Gemini 3 can display “immersive visual layouts and interactive tools and simulations, all generated completely on the fly based on your query.”

The new model will also be rolled out to all Gemini app users. Google is also promising an increase in the number of searches run for each query: the company plans to use Gemini 3 to perform “even more searches to discover relevant web content” that might have been missed before.

The company also announced that Gemini 3 will finally support AI Overviews, the Google Search feature that automatically summarizes the answer to your query (for better or worse) at the top of the results page.

“In the coming weeks, we’re also enhancing our automatic model selection in Search with Gemini 3. This means Search will intelligently route your most challenging questions in AI Mode and AI Overviews to this frontier model – while continuing to use faster models for simpler tasks,” the company wrote in a separate blog post.

Tulsee Doshi, Google DeepMind’s senior director and head of product, says the new model will bring the company closer to making information “universally accessible and useful” as its search engine continues to evolve.

“I think the one really big step in that direction is to step out of the paradigm of just text responses and to give you a much richer, more complete view of what you can actually see.”

Gemini 3 is “natively multimodal,” meaning it can process text, images, and audio all at once, rather than separately. For example, Google says Gemini 3 could be used to translate photos of recipes and then turn them into a cookbook, or to create interactive flashcards based on a series of video lectures.

The upgraded AI model will also enable “generative interfaces,” a tool Google is testing in Gemini Labs that allows Gemini 3 to create a visual, magazine-style format with pictures you can browse through, or a dynamic layout with a custom user interface tailored to your prompt.

The AI-powered Google Search feature will similarly present you with visual elements, like images, tables, grids, and simulations, based on your query.

It’s also capable of performing more searches using an upgraded version of Google’s “query fan-out technique,” which now not only breaks down questions into bits it can search for on your behalf, but is better at understanding intent to help “find new content that it may have previously missed,” according to Google’s announcement.

The company said the Gemini 3 integration with AI Overviews will initially be limited to paid subscribers of Google’s AI plans. In the US, they can also use a more powerful Gemini 3 Pro model starting today via AI Mode by selecting the “Thinking” option from the model drop-down menu.

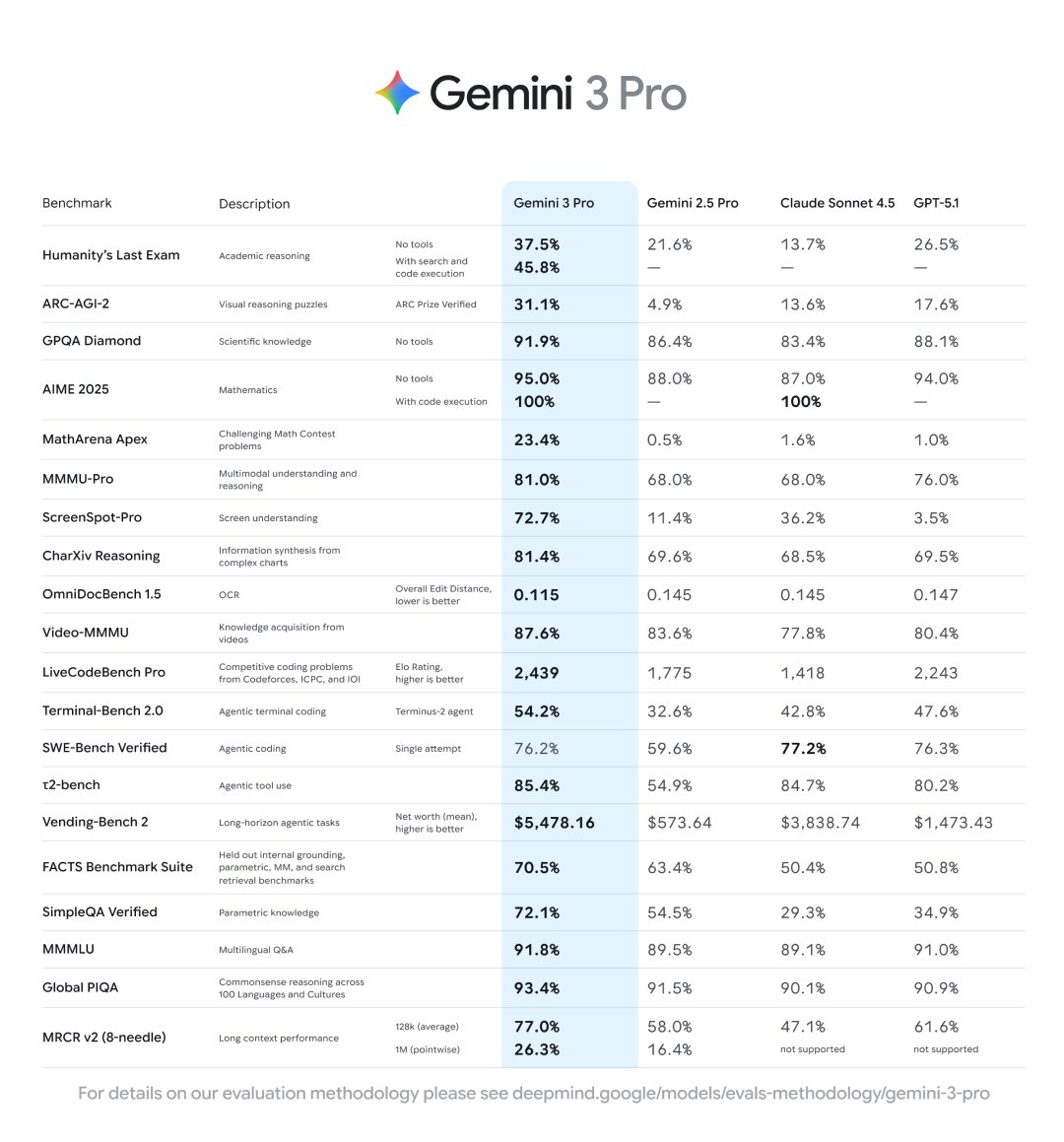

According to Google, the Gemini 3 model outperforms GPT-5.1 and Anthropic’s Claude Sonnet 4.5 across a wide range of AI benchmarks, including math, scientific reasoning, and multilingual question answering.

“It’s state-of-the-art in reasoning, built to grasp depth and nuance – whether it’s perceiving the subtle clues in a creative idea, or peeling apart the overlapping layers of a difficult problem,” Pichai added.

In an effort to attract software developers, the company also announced Google Antigravity, a set of AI-driven programming tools that appears to be a response to competing coding products from OpenAI and Anthropic.

“Using Gemini 3’s advanced reasoning, tool use, and agentic coding capabilities, Google Antigravity transforms AI assistance from a tool in a developer’s toolkit into an active partner,” Google’s CEO said. “Now, agents can autonomously plan and execute complex, end-to-end software tasks simultaneously on your behalf while validating their own code.”

Along with these improvements, Gemini 3 comes with better reasoning and agentic capabilities, allowing it to complete more complex tasks and “reliably plan ahead over longer horizons,” according to Google.

In addition, Google has developed an even smarter “Gemini 3 Deep Think mode,” but the company wants to be careful with its release. “We’re taking extra time for safety evaluations and input from safety testers before making it available to Google AI Ultra subscribers in the coming weeks,” the company explained.
